Elasticsearch Cluster Installation

1. Installation media

elasticsearch-7.5.0-linux-x86_64.tar.gz elasticsearch-analysis-ik-7.5.0.zip

Upload directory on the server: /opt

2. Environment preparation

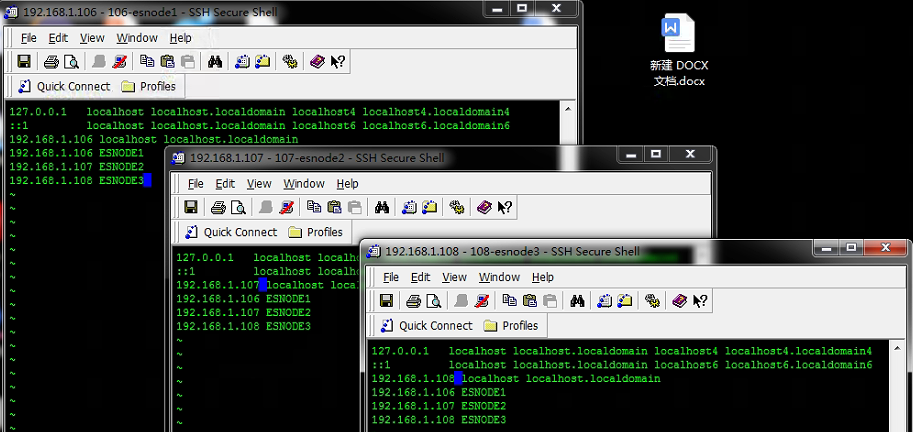

Servers

| No. | IP | Hostname | Role |
|---|---|---|---|
| 1 | 192.168.1.106 | ESNODE1 | master |
| 2 | 192.168.1.107 | ESNODE2 | slave |
| 3 | 192.168.1.108 | ESNODE3 | slave |

JDK requirements:

3. Add /etc/hosts entries

192.168.1.106 ESNODE1 ESNODE
192.168.1.107 ESNODE2 ESNODE
192.168.1.108 ESNODE3 ESNODE

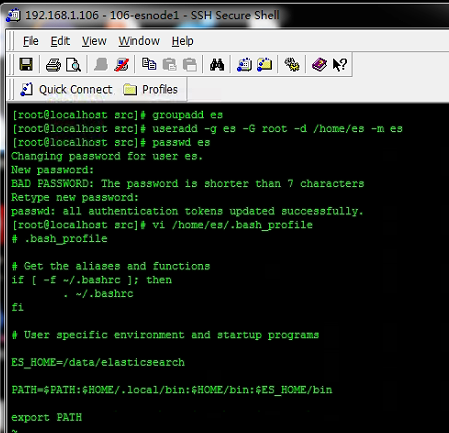
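The entries have to be present on every server. A minimal sketch, run as root, that appends them only when they are missing so that re-running it does not create duplicates; `add_es_hosts` is a hypothetical name and the file argument defaults to `/etc/hosts`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster host entries if they are not already present.
# Usage: add_es_hosts [HOSTS_FILE]   (default /etc/hosts)
add_es_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in \
    "192.168.1.106 ESNODE1 ESNODE" \
    "192.168.1.107 ESNODE2 ESNODE" \
    "192.168.1.108 ESNODE3 ESNODE"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file"; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

Running `add_es_hosts` twice leaves exactly one copy of each line.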

4. Create the elasticsearch user

On all three servers (106/107/108):

su - root

chmod 777 /data

groupadd es

useradd -g es -G root -d /home/es -m es

passwd es

Set the environment variables:

vi /home/es/.bash_profile

ES_HOME=/data/elasticsearch

PATH=$PATH:$HOME/.local/bin:$HOME/bin:$ES_HOME/bin

export PATH

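5. Passwordless SSH for the es user

To make sure the settings above take effect, preferably reboot the servers first, then log in as the es user to perform the following steps.

[ESNODE1 node]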

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cd ~/.ssh/

cp id_dsa.pub ESNODE1.pub

cp id_dsa.pub authorized_keys

scp ESNODE1.pub es@ESNODE2:`pwd`

scp ESNODE1.pub es@ESNODE3:`pwd`

[ESNODE2 node]

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cd ~/.ssh/

cp id_dsa.pub ESNODE2.pub

cp id_dsa.pub authorized_keys

scp ESNODE2.pub es@ESNODE1:`pwd`

scp ESNODE2.pub es@ESNODE3:`pwd`

[ESNODE3 node]

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cd ~/.ssh/

cp id_dsa.pub ESNODE3.pub

cp id_dsa.pub authorized_keys

scp ESNODE3.pub es@ESNODE1:`pwd`

scp ESNODE3.pub es@ESNODE2:`pwd`

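On each node, append the other two nodes' public keys to authorized_keys, then confirm that every node can ssh to the others without a password (for example, ssh ESNODE2 from ESNODE1). Note: OpenSSH 7.0 and later disable DSA keys by default; if key-based login is refused, regenerate the keys with -t rsa (id_rsa) instead.

[ESNODE1 node]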

cat ESNODE2.pub >> authorized_keys

cat ESNODE3.pub >> authorized_keys

[ESNODE2 node]

cat ESNODE1.pub >> authorized_keys

cat ESNODE3.pub >> authorized_keys

[ESNODE3 node]

cat ESNODE1.pub >> authorized_keys

cat ESNODE2.pub >> authorized_keys
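A more compact alternative, sketched under the assumption that OpenSSH 6.5 or later (for ed25519 keys) and `ssh-copy-id` are installed: each node generates one key and pushes it to all three nodes, including itself, which is what the cp/scp/cat steps above achieve by hand. `setup_es_ssh` and the `DRY_RUN` switch are hypothetical; run it as es on each node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an ed25519 key and install it on every node.
# Usage: setup_es_ssh [KEY_PATH]   (default ~/.ssh/id_ed25519)
# Set DRY_RUN=1 to print the commands instead of running them.
setup_es_ssh() {
  local key="${1:-$HOME/.ssh/id_ed25519}" run="" host
  if [ "${DRY_RUN:-0}" = "1" ]; then run="echo"; fi
  $run mkdir -p -m 700 "$(dirname "$key")"
  # Generate a passphrase-less key pair only if none exists yet
  if [ ! -f "$key" ]; then
    $run ssh-keygen -t ed25519 -N '' -f "$key"
  fi
  for host in ESNODE1 ESNODE2 ESNODE3; do
    # Appends the public key to es@host:~/.ssh/authorized_keys
    # (asks for the es password once per host)
    $run ssh-copy-id -i "$key.pub" "es@$host"
  done
}
```

After `setup_es_ssh` has run on all three nodes, `ssh ESNODE2 hostname` should print the hostname without a password prompt.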

6. Deploy Elasticsearch

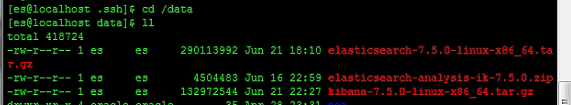

6.1 Upload and extract Elasticsearch

Log in to 192.168.1.106 as the es user and perform the following steps.

Upload the files to the /data directory:

cd /data

tar -zxvf elasticsearch-7.5.0-linux-x86_64.tar.gz

# Rename the directory to elasticsearch

mv elasticsearch-7.5.0 elasticsearch

# Confirm that the files are owned by the es user

ls -ld /data/elasticsearch

# Extract elasticsearch-analysis-ik-7.5.0.zip into the plugins directory of elasticsearch

mv elasticsearch-analysis-ik-7.5.0.zip elasticsearch/plugins/

cd /data/elasticsearch/plugins/

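# The archive has no top-level directory, so extract it straight into plugins/analysis-ik (see "IK plugin cannot be loaded" under Common errors)

unzip elasticsearch-analysis-ik-7.5.0.zip -d analysis-ik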

rm -f elasticsearch-analysis-ik-7.5.0.zip

6.2 Create the Elasticsearch data and log directories:

mkdir -p /data/elasticsearch/data

mkdir -p /data/elasticsearch/logs

6.3 Configure the cluster

[ESNODE1 node]

vi /data/elasticsearch/config/elasticsearch.yml

cluster.name: cfesb-esCluster

node.name: ESNODE1

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

network.host: 192.168.1.106

http.port: 9200

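# New in ES 7.x: addresses (here the hostnames from /etc/hosts) of the master-eligible nodes to contact during discovery; these nodes can be elected master once the service is running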

discovery.seed_hosts: ["ESNODE1", "ESNODE2", "ESNODE3"]

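# New in ES 7.x: the master-eligible nodes that vote in the first master election when a new cluster is bootstrapped (remove this setting once the cluster has formed)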

cluster.initial_master_nodes: ["ESNODE1", "ESNODE2", "ESNODE3"]

# Enable cross-origin requests (CORS), needed by elasticsearch-head

http.cors.enabled: true

# * allows requests from any origin

http.cors.allow-origin: "*"
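Since the three nodes differ only in `node.name` and `network.host`, the file can also be generated. A minimal sketch; `gen_es_yml` is a hypothetical helper, and writing its output over elasticsearch.yml replaces the default file, comments included.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print elasticsearch.yml for one node of this cluster.
# Usage: gen_es_yml NODE_NAME IP
gen_es_yml() {
  if [ $# -ne 2 ]; then
    echo "usage: gen_es_yml NODE_NAME IP" >&2
    return 1
  fi
  local name="$1" ip="$2"
  # Only node.name and network.host change between nodes
  cat <<EOF
cluster.name: cfesb-esCluster
node.name: ${name}
path.data: /data/elasticsearch/data
path.logs: /data/elasticsearch/logs
network.host: ${ip}
http.port: 9200
discovery.seed_hosts: ["ESNODE1", "ESNODE2", "ESNODE3"]
cluster.initial_master_nodes: ["ESNODE1", "ESNODE2", "ESNODE3"]
http.cors.enabled: true
http.cors.allow-origin: "*"
EOF
}
```

Example: `gen_es_yml ESNODE1 192.168.1.106 > /data/elasticsearch/config/elasticsearch.yml`.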

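6.4 Adjust the JVM settings

vi /data/elasticsearch/config/jvm.options

Set the heap to no more than 50% of physical memory, with the same value for -Xms and -Xmx, each on its own line:

-Xms16g
-Xmx16g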
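A sketch that derives the two lines from the installed memory. Besides the 50% rule above, it assumes a cap of 31 GB, following Elastic's guidance to stay below the roughly 32 GB threshold for compressed object pointers (the exact cutoff varies by JVM). Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: derive -Xms/-Xmx from total RAM in MB.
# Rule: half of physical memory, capped at 31 GB (assumption) and at least 1 GB.
recommend_heap_gb() {
  local total_mb="$1" gb
  gb=$(( total_mb / 2 / 1024 ))
  if [ "$gb" -gt 31 ]; then gb=31; fi
  if [ "$gb" -lt 1 ]; then gb=1; fi
  echo "$gb"
}

# Print the two jvm.options lines; Xms and Xmx get the same value
heap_options() {
  local gb
  gb=$(recommend_heap_gb "$1")
  printf '%s\n' "-Xms${gb}g" "-Xmx${gb}g"
}
```

`heap_options 32768` prints `-Xms16g` and `-Xmx16g`. On a server, pass the real size: `heap_options "$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)"`.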

6.5 Copy the directory to the other nodes

cd /data

scp -r elasticsearch es@ESNODE2:`pwd`

scp -r elasticsearch es@ESNODE3:`pwd`

[ESNODE2 node] In /data/elasticsearch/config/elasticsearch.yml, change:

node.name: ESNODE2

network.host: 192.168.1.107

[ESNODE3 node] In /data/elasticsearch/config/elasticsearch.yml, change:

node.name: ESNODE3

network.host: 192.168.1.108
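The two edits can be scripted instead of done in vi. A sketch with a hypothetical `set_node_identity` helper; it only touches uncommented lines that start with the setting name, and writes through a temporary file so it works with any POSIX sed (no reliance on GNU `sed -i`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: set node.name and network.host in a copied elasticsearch.yml.
# Usage: set_node_identity FILE NODE_NAME IP
set_node_identity() {
  local file="$1" name="$2" ip="$3" tmp
  tmp=$(mktemp) || return 1
  # Replace the whole node.name / network.host lines
  if ! sed -e "s/^node\.name:.*/node.name: ${name}/" \
           -e "s/^network\.host:.*/network.host: ${ip}/" "$file" > "$tmp"; then
    rm -f "$tmp"
    return 1
  fi
  # Write back through the original file so its owner and permissions are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

On ESNODE2, for example: `set_node_identity /data/elasticsearch/config/elasticsearch.yml ESNODE2 192.168.1.107`.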

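6.6 Adjust system parameters

Log in to 192.168.1.106/107/108 as root and perform the following steps (the limits.conf changes apply to new login sessions).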

vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 516254

* hard nproc 516254

* soft memlock unlimited

* hard memlock unlimited

vi /etc/sysctl.conf

vm.max_map_count=262144

sysctl -p
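Because network.host is a non-loopback address, Elasticsearch enforces its bootstrap checks at startup, and too-low limits stop it from starting. A sketch for verifying the values configured above after logging in again as es; `check_min` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a current limit with the required minimum.
# Usage: check_min NAME ACTUAL REQUIRED   ("unlimited" always passes)
check_min() {
  local name="$1" actual="$2" required="$3"
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ] 2>/dev/null; then
    echo "OK   ${name}=${actual} (>= ${required})"
  else
    echo "FAIL ${name}=${actual} (need >= ${required})"
    return 1
  fi
}
```

Usage:

check_min nofile "$(ulimit -n)" 65536
check_min nproc "$(ulimit -u)" 516254
check_min vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144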

7. Start Elasticsearch

Run on each node:

su - es

/data/elasticsearch/bin/elasticsearch -d
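Once all three nodes are running, it is worth checking that they formed a single cluster. A sketch assuming curl is available; `health_status` is a hypothetical helper that extracts the `status` field from a `_cluster/health` response with sed, which is enough for this flat field but is not a general JSON parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "status" value (green/yellow/red) from a
# _cluster/health JSON response read on stdin.
health_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}
```

Usage: `curl -s 'http://192.168.1.106:9200/_cluster/health?wait_for_status=green&timeout=60s' | health_status` waits up to 60 seconds and prints the status; `curl -s 'http://192.168.1.106:9200/_cat/nodes?v'` should list all three nodes.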

8. Create the esb index

curl -X PUT http://192.168.1.106:9200/esb?pretty
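The command above creates esb with the 7.x defaults (one primary shard, one replica). A sketch for setting both explicitly; `index_body` is a hypothetical helper, and the shard count 3 in the usage line is only an example, not a recommendation from this guide. The number of primary shards cannot be changed on an existing index without reindexing, split, or shrink.

```shell
#!/usr/bin/env bash
# Hypothetical helper: JSON body for creating an index with explicit
# primary shard and replica counts.
# Usage: index_body SHARDS REPLICAS
index_body() {
  printf '{"settings":{"number_of_shards":%d,"number_of_replicas":%d}}' "$1" "$2"
}
```

Usage: `curl -X PUT "http://192.168.1.106:9200/esb?pretty" -H 'Content-Type: application/json' -d "$(index_body 3 1)"`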

9. Deploy Kibana

9.1 Upload and extract Kibana

[ESNODE1 node]

su - es

cd /data

tar -zxvf kibana-7.5.0-linux-x86_64.tar.gz

mv kibana-7.5.0-linux-x86_64 kibana

cd kibana

mkdir logs

# Edit the config/kibana.yml configuration file

vi config/kibana.yml

server.host: "192.168.1.106"

server.maxPayloadBytes: 1048576000

server.name: "ESNODE1"

elasticsearch.hosts: ["http://ESNODE:9200"]

logging.timezone: Asia/Shanghai

9.2 Start Kibana

su - es

cd /data/kibana

# Create the startup script startKibana.sh with the following content

vi startKibana.sh

nohup ./bin/kibana > logs/kibana.log &

# Make it executable

chmod 755 startKibana.sh

# Start

./startKibana.sh
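A variant of startKibana.sh written as a function, as a sketch. The two additions are not part of the original script: stderr is redirected explicitly (so it lands in the log even when the script is not started from a terminal), and the PID is saved to kibana.pid. `start_kibana` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch of startKibana.sh as a function.
# Usage: start_kibana [KIBANA_HOME]   (default /data/kibana)
start_kibana() (
  # The body runs in a subshell, so the cd does not affect the caller
  cd "${1:-/data/kibana}" || exit 1
  mkdir -p logs
  nohup ./bin/kibana > logs/kibana.log 2>&1 &
  # $! is the PID of the background job started by nohup
  echo $! > kibana.pid
)
```

In startKibana.sh, call `start_kibana /data/kibana` after the definition; `ps -p "$(cat /data/kibana/kibana.pid)"` then shows whether the process is still running.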

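9.3 Access URL

http://192.168.1.106:5601 (Kibana's default port, since server.port is not set in kibana.yml)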

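10. Install elasticsearch-head

The head plugin only needs to be installed on one node; here we install it on the master node, 192.168.1.106.

1. Download the head plugin

Download page: https://github.com/mobz/elasticsearch-head

Here we download elasticsearch-head-master.tar.gz.

2. Extract the archive

Run tar -zxvf elasticsearch-head-master.tar.gz; this creates an elasticsearch-head-master directory.

3. Install the prerequisites

head requires Node.js, plus grunt installed through Node.js (npm).

Perform the following steps as the es user.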

3.1 Download Node.js

cd /data

wget https://nodejs.org/dist/v10.9.0/node-v10.9.0-linux-x64.tar.xz

# Extract

tar xf node-v10.9.0-linux-x64.tar.xz

# Enter the extracted directory

cd node-v10.9.0-linux-x64/

# Run node to check the version

./bin/node -v

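# Go back to /data and move Node.js to /usr/local/nodejs (run this step as root; the es user cannot write to /usr/local)

cd /data

mv node-v10.9.0-linux-x64 /usr/local/nodejs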

3.2 Configure the Node.js environment variables

vim /home/es/.bash_profile

# Directory containing the Node.js binaries

export NODE_HOME=/usr/local/nodejs/bin

export PATH=${NODE_HOME}:$PATH

# Apply the change to the current shell

source /home/es/.bash_profile

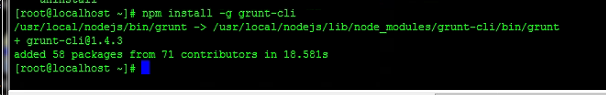

3.3 Install grunt

npm install -g grunt-cli

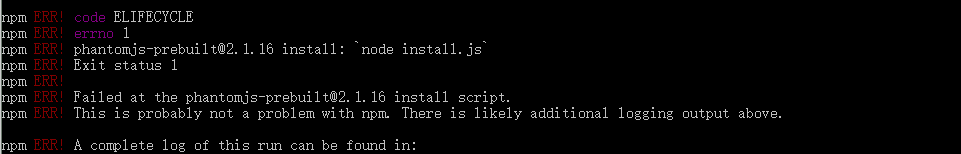

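If npm reports an error for phantomjs-prebuilt:

Solution: install the exact version named in the error message, skipping its install scripts. For example: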

npm install phantomjs-prebuilt@2.1.16 --ignore-scripts

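3.4 Configure head

Once the steps above are done, go into the elasticsearch-head directory.

Change the listen address in elasticsearch-head-master/Gruntfile.js by editing the following section (hostname: '0.0.0.0' makes head listen on all interfaces):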

connect: {
  server: {
    options: {
      hostname: '0.0.0.0',
      port: 9100,
      base: '.',
      keepalive: true
    }
  }
}
});

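Change the connection address in elasticsearch-head/_site/app.js: on the following line, replace http://localhost:9200 with the address of a cluster node, for example http://192.168.1.106:9200.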

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://localhost:9200";

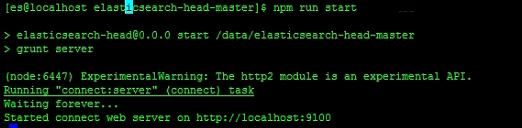

3.5 Start head

In the elasticsearch-head directory, run the following commands to install the dependencies and start head:

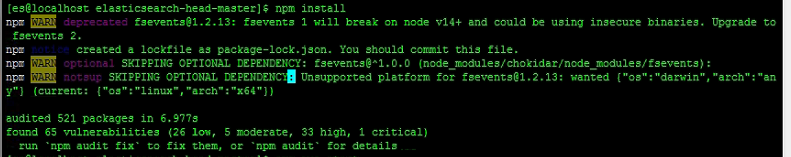

npm install

Output like the screenshot below means the installation succeeded. Then start head:

npm run start

or, to run it in the background:

nohup npm run start > nohup.out 2>&1 &

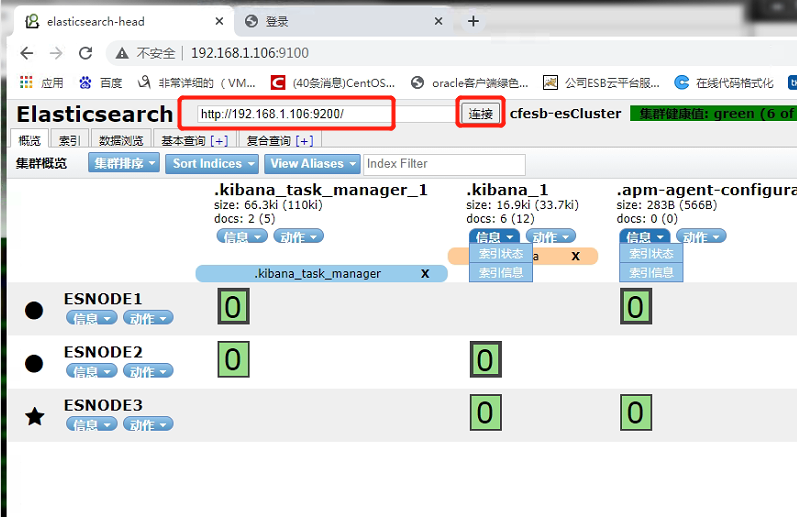

Once started, head is available at http://192.168.1.106:9100:

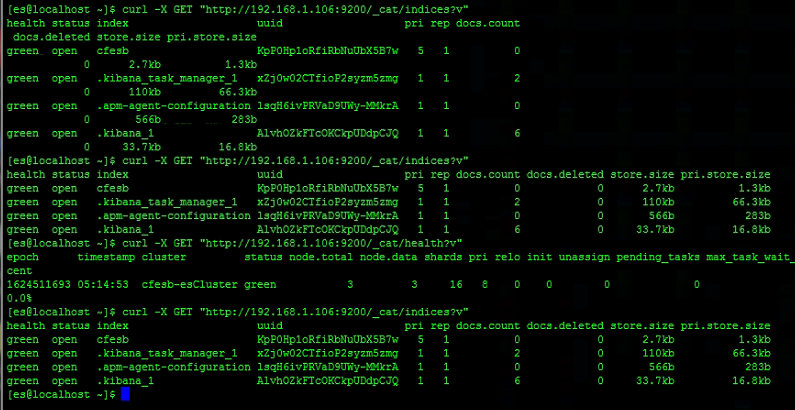
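head runs in the browser on port 9100 and calls Elasticsearch on port 9200, so it only works if the http.cors.* settings from 6.3 are active. A sketch for checking that from the shell; `has_cors_header` is a hypothetical helper that reads response headers on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the HTTP response headers on stdin
# contain an Access-Control-Allow-Origin header.
has_cors_header() {
  # Header names are case-insensitive, hence -i
  grep -qi '^access-control-allow-origin:'
}
```

Usage (`-D -` writes the response headers to stdout, `-o /dev/null` discards the body):

curl -s -D - -o /dev/null -H 'Origin: http://192.168.1.106:9100' http://192.168.1.106:9200/ | has_cors_header && echo "CORS enabled"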

11. Common Elasticsearch commands

Elasticsearch URL: http://192.168.1.106:9200

# List the nodes

curl -X GET "http://192.168.1.106:9200/_cat/nodes?v"

# Check cluster health

curl -X GET "http://192.168.1.106:9200/_cat/health?v"

# List all indices

curl -X GET "http://192.168.1.106:9200/_cat/indices?v"

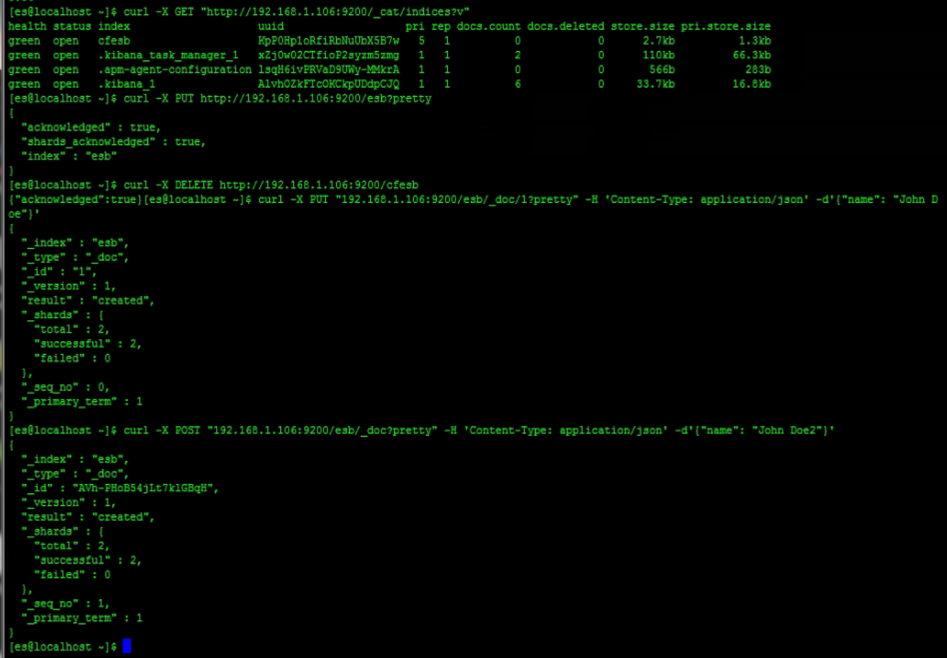

# Create an index

curl -X PUT http://192.168.1.106:9200/esb?pretty

# Delete an index

curl -X DELETE http://192.168.1.106:9200/cfesb

# Create documents (PUT with an explicit ID, POST with an auto-generated ID)

curl -X PUT "192.168.1.106:9200/esb/_doc/1?pretty" -H 'Content-Type: application/json' -d'{"name": "John Doe"}'

curl -X POST "192.168.1.106:9200/esb/_doc?pretty" -H 'Content-Type: application/json' -d'{"name": "John Doe2"}'

# View the mapping

curl -X GET "http://192.168.1.106:9200/esb/_mapping"

# Get a document

curl -X GET "192.168.1.106:9200/esb/_doc/1?pretty"

# Delete a document

curl -X DELETE "192.168.1.106:9200/esb/_doc/1?pretty"

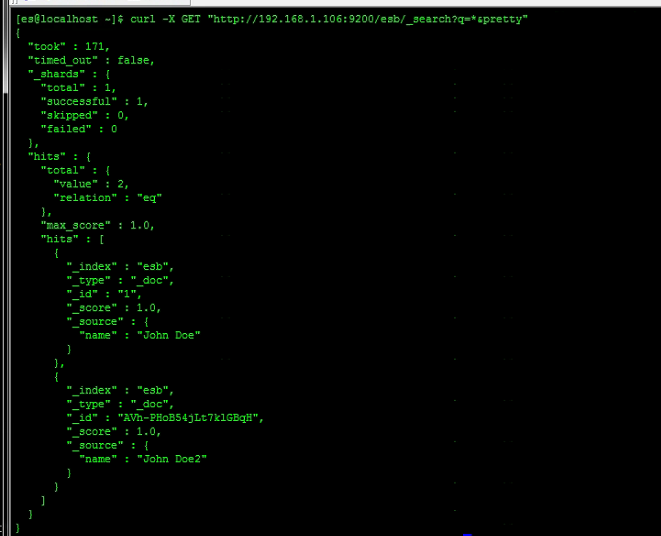

# Search

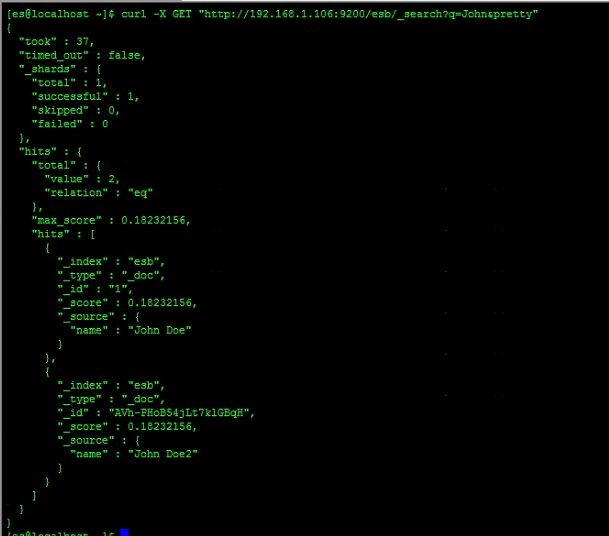


curl -X GET "http://192.168.1.106:9200/esb/_search?q=*&pretty"

curl -X GET "192.168.1.106:9200/esb/_search?q=John&pretty"
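The commands above repeat the cluster address and headers. A sketch of a small wrapper; `es` and the `ES_URL` variable are hypothetical names, not part of Elasticsearch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wrap curl so the cluster address is written only once.
# Override ES_URL to talk to another node, e.g. ES_URL=http://192.168.1.107:9200
ES_URL="${ES_URL:-http://192.168.1.106:9200}"

# Usage: es METHOD PATH [extra curl arguments...]
es() {
  local method="$1" path="$2"
  shift 2
  # ${path#/} drops a leading slash, so "/_cat/nodes" and "_cat/nodes" both work
  curl -s -X "$method" "${ES_URL}/${path#/}" \
    -H 'Content-Type: application/json' "$@"
}
```

Examples: `es GET '_cat/nodes?v'`, `es PUT 'esb/_doc/1?pretty' -d '{"name": "John Doe"}'`, `es DELETE 'esb/_doc/1?pretty'`.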

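Storage path on disk, for example: /data/elasticsearch/data/nodes/0/indices/ngLAVeDQScyZorYJATZ41w/0/index (the segment after indices/ is the index UUID, followed by the shard number)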

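API operations: creating a mapping. The remaining operations are awkward with curl, so complex requests are sent with Postman. A mapping is created with a PUT request. The JSON below defines three fields, title, url and doc. Their type property is the data type (Elasticsearch data types are not covered here), and analyzer: ik_max_word means the field is analyzed with the IK analyzer's finest-grained segmentation (ik_smart is the coarse-grained mode). The request is written in Kibana Dev Tools console form; in Postman, send it to http://192.168.1.106:9200/esb/_mapping.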

PUT esb/_mapping
{
  "properties": {
    "title": {
      "type": "text",
      "analyzer": "ik_max_word"
    },
    "url": {
      "type": "text"
    },
    "doc": {
      "type": "text",
      "analyzer": "ik_max_word"
    }
  }
}

The mapping actually used for the esb index, sent with curl:

curl -X PUT "192.168.1.106:9200/esb/_mapping?pretty" -H 'Content-Type: application/json' -d '
{
  "properties": {
    "businessKeyValues": { "type": "text" },
    "endTimeStamp": { "type": "date", "format": "yyyy-MM-dd HH:mm:ss:SSS" },
    "interfaceName": { "type": "keyword" },
    "osbHost": { "type": "keyword" },
    "osbStatusCode": { "type": "text" },
    "partnerTimeStamp": { "type": "text" },
    "requestMessage": { "type": "text" },
    "responseMessage": { "type": "text" },
    "source": { "type": "keyword" },
    "startTimeStamp": { "type": "date", "format": "yyyy-MM-dd HH:mm:ss:SSS" },
    "target": { "type": "keyword" },
    "transactionId": { "type": "text" },
    "type": { "type": "keyword" },
    "elapseTime": { "type": "long" },
    "envlopSize": { "type": "long" },
    "osbMessage": { "type": "text" },
    "createDate": { "type": "date", "format": "yyyy-MM-dd HH:mm:ss" }
  }
}'

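Raise the maximum analyzed offset for highlighting (index.highlight.max_analyzed_offset) with a PUT request:

PUT http://192.168.1.106:9200/esb/_settings
{
  "index": {
    "highlight.max_analyzed_offset": 100000000
  }
}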

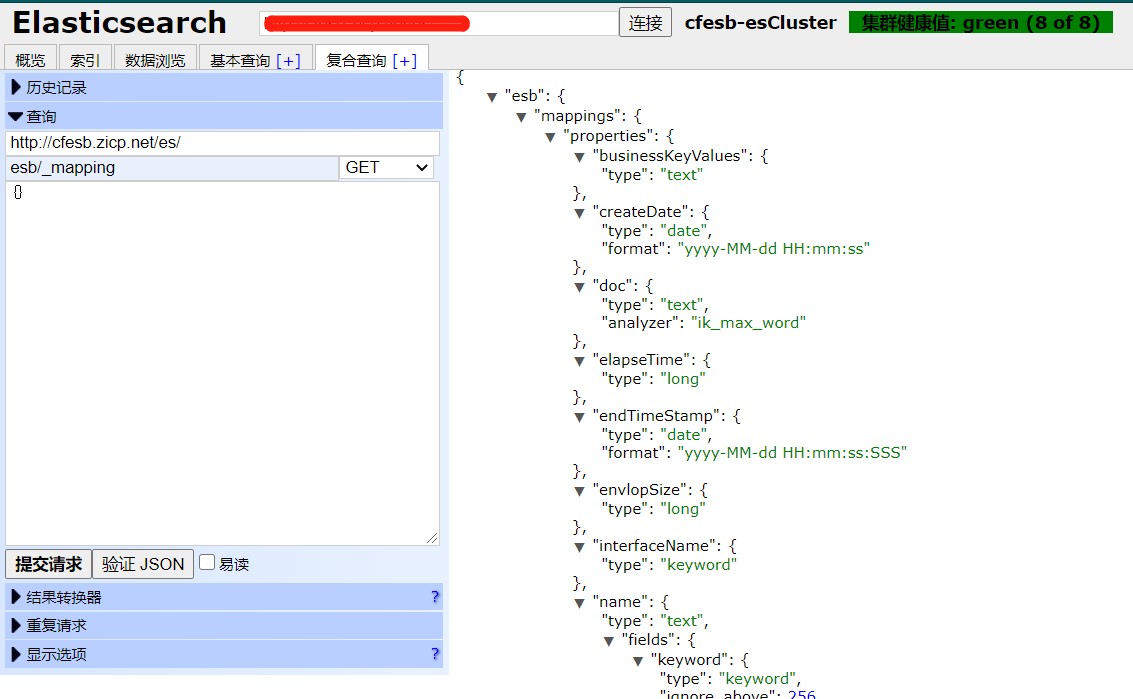

API operations: querying a mapping. Use a GET request to read an index's mapping; here we query the mapping created above:

curl -XGET '192.168.1.106:9200/esb/_mapping'

Response:

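Query example 1: send the body below to the search endpoint, e.g. curl -X POST '192.168.1.106:9200/esb/_search' -H 'Content-Type: application/json' -d '{...}'

{
  "query": {
    "bool": {
      "must": [
        { "match": { "interfaceName": "PS_DFHGZ_offlineVehicle" } },
        { "match": { "target": "RCDCS" } }
      ]
    }
  }
}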

Query example 2 (same endpoint):

{
  "query": {
    "bool": {
      "must": [
        { "match": { "interfaceName": "PS_SAP_OrderRelease" } },
        { "match": { "requestMessage": "001000226497" } },
        { "match": { "target": "LES" } },
        { "match": { "type": "PS" } }
      ],
      "filter": {
        "range": { "startTimeStamp": { "from": "2014-01-02 07:13:27:534", "to": "2029-11-04 07:13:27:534" } }
      }
    }
  },
  "sort": [
    { "startTimeStamp": { "order": "desc" } }
  ]
}

Query example 3 (same endpoint; the IK plugin for 7.x registers the analyzers ik_smart and ik_max_word):

{
  "query": {
    "bool": {
      "must": [
        { "term": { "interfaceName": "PS_SCM_D07A_DESADV" } },
        { "range": { "startTimeStamp": { "from": "2020-01-02 07:13:27:534", "to": "2020-01-04 07:13:27:534" } } },
        { "match": { "requestMessage": { "query": "金康", "analyzer": "ik_max_word" } } }
      ],
      "must_not": [],
      "should": []
    }
  },
  "from": 0,
  "size": 10,
  "sort": []
}
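When the exact interface name is known, the keyword field interfaceName and the time range can both go into filter context, which skips relevance scoring. A sketch of a hypothetical helper that builds such a body; it uses gte/lte, the standard equivalents of the inclusive from/to used above, and inserts the values without JSON escaping, so they must not contain double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: search body for one interface within a time range,
# newest first.
# Usage: search_body INTERFACE FROM TO [SIZE]
search_body() {
  local iface="$1" from="$2" to="$3" size="${4:-10}"
  printf '{"query":{"bool":{"filter":[{"term":{"interfaceName":"%s"}},{"range":{"startTimeStamp":{"gte":"%s","lte":"%s"}}}]}},"size":%d,"sort":[{"startTimeStamp":{"order":"desc"}}]}' \
    "$iface" "$from" "$to" "$size"
}
```

Usage: `curl -X POST '192.168.1.106:9200/esb/_search?pretty' -H 'Content-Type: application/json' -d "$(search_body PS_SAP_OrderRelease '2020-01-02 07:13:27:534' '2020-01-04 07:13:27:534')"`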

Delete documents (for example, old documents within a time range):

curl -X POST "192.168.1.106:9200/esb/_delete_by_query" -H 'Content-Type: application/json' -d '
{
  "query": {
    "range": { "startTimeStamp": { "from": "2020-01-02 07:13:27:534", "to": "2020-01-04 07:13:27:534" } }
  }
}'

# Then merge away the deleted documents to reclaim disk space

curl -X POST '192.168.1.106:9200/esb/_forcemerge?only_expunge_deletes=true&max_num_segments=1'

For example, to delete the PS_SAP_BOM2DFERP interface logs, send this body to _delete_by_query:

{
  "query": {
    "bool": {
      "must": [
        { "match_phrase": { "interfaceName": "PS_SAP_BOM2DFERP" } }
      ],
      "filter": {
        "range": {
          "startTimeStamp": {
            "from": "2010-01-01 00:00:00:000",
            "to": "2020-06-30 23:59:59:999"
          }
        }
      }
    }
  }
}

Sample message 1

{
  "osbStatusCode" : "S",
  "source" : "SAP",
  "type" : "PS",
  "transactionId" : "005056977A9E1EDA8ADC088CD9BB7606",
  "target" : "DFSCM",
  "requestMessage" : "qqqqqqq中国 dd",
  "startTimeStamp" : "2019-12-30 08:41:35:223",
  "businessKeyValues" : "MonBusinessKeyNeddChange",
  "endTimeStamp" : "2019-12-30 08:41:35:517",
  "interfaceName" : "PS_SAP_PO2DFSCM",
  "responseMessage" : "响应001 湖南",
  "partnerTimeStamp" : "2019-12-30 08:42:10",
  "osbHost" : "osb_s2_1"
}

Sample message 2

{
  "osbStatusCode" : "S",
  "source" : "SAP",
  "type" : "PS",
  "transactionId" : "005056977A9E1EDA8ADC088CD9BB7608",
  "target" : "MOM",
  "requestMessage" : "aaaaqqq人民 日报",
  "startTimeStamp" : "2019-12-30 18:49:35:223",
  "businessKeyValues" : "MonBusinessKeyNeddChange",
  "endTimeStamp" : "2019-12-30 18:49:35:517",
  "interfaceName" : "PS_SAP_OrederRelease",
  "responseMessage" : "响应002 重庆",
  "partnerTimeStamp" : "2019-12-30 18:52:10",
  "osbHost" : "osb_s1_1"
}

Sample message 3

{
  "osbStatusCode" : "S",
  "source" : "SAP",
  "type" : "PS",
  "transactionId" : "00505697584E1EDA8CD235C86029D716",
  "target" : "SCM",
  "requestMessage" : "<ZSAP_PD_SCM_PO_OUT></ZSAP_PD_SCM_PO_OUT>",
  "startTimeStamp" : "2020-01-09 03:39:07:000",
  "businessKeyValues" : "MonBusinessKeyNeddChange",
  "endTimeStamp" : "2020-01-09 03:39:07:000",
  "interfaceName" : "PS_SAP_PurchaseOrder2SCM",
  "responseMessage" : "<urn:ZSAP_PD_SCM_PO_OUT_RESPONSE/>",
  "partnerTimeStamp" : "2020-01-09 03:39:50",
  "osbHost" : "osb_s4_1"
}
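To load sample messages in one request, the _bulk API takes newline-delimited JSON: an action line before each document, and a final newline. A sketch, assuming each sample has been saved as one JSON object per line in a hypothetical file samples.ndjson; `to_bulk` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn one-JSON-document-per-line input (stdin) into an
# _bulk body. {"index":{}} lets Elasticsearch generate the _id; the target
# index comes from the request URL.
to_bulk() {
  local doc
  # "|| [ -n "$doc" ]" also handles a last line without a trailing newline
  while IFS= read -r doc || [ -n "$doc" ]; do
    if [ -z "$doc" ]; then
      continue  # skip blank lines
    fi
    printf '{"index":{}}\n%s\n' "$doc"
  done
}
```

Usage (--data-binary keeps the newlines _bulk requires; -d would strip them):

to_bulk < samples.ndjson > bulk.ndjson
curl -X POST "192.168.1.106:9200/esb/_bulk?pretty" -H 'Content-Type: application/x-ndjson' --data-binary @bulk.ndjson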

Common errors

When starting Elasticsearch you may see a warning about the option UseConcMarkSweepGC:

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

This is only a warning: the CMS garbage collector has been marked deprecated since JDK 9.

Fix: in config/jvm.options, change -XX:+UseConcMarkSweepGC to -XX:+UseG1GC.

log4j permission errors

A log4j error such as the following usually means the es user lacks permission on the files under the Elasticsearch logs directory:

ERROR Unable to invoke factory method in class org.apache.logging.log4j.core.appender.RollingFileAppender for element RollingFile: java.lang.IllegalStateException: No factory method found for class org.apache.logging.log4j.core.appender.RollingFileAppender java.lang.IllegalStateException: No factory method found for class org.apache.logging.log4j.core.appender.RollingFileAp

Solution: fix the ownership as root:

su - root

cd /data/elasticsearch/logs

chown -R es:es *

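IK plugin cannot be loaded

Could not load plugin descriptor for plugin directory [elasticsearch-analysis-ik-7.5.0.jar]

Solution: the plugin files must be located in plugins/analysis-ik. Move them there (mv reports that it cannot move analysis-ik into itself; that message can be ignored):

cd /data/elasticsearch/plugins

mkdir analysis-ik

cd analysis-ik/

mv /data/elasticsearch/plugins/* .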

elasticsearch-head errors

npm install warning

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expression

Solution: open package.json in elasticsearch-head-master and change license to Apache-2.0.

© 2021 CFESB.CN All rights reserved, powered by Gitbook. Last updated: 2021-07-06 16:26